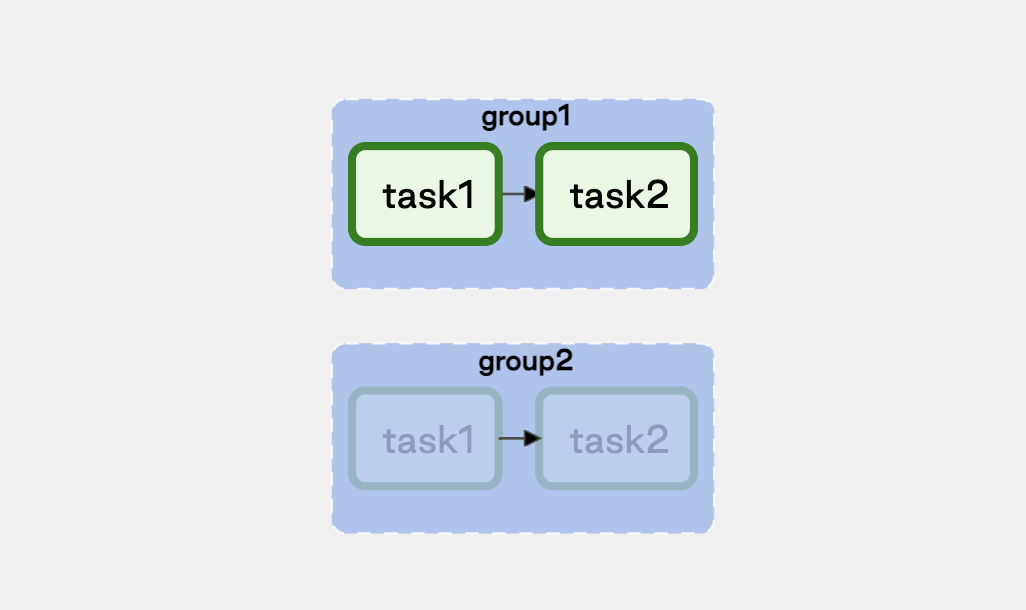

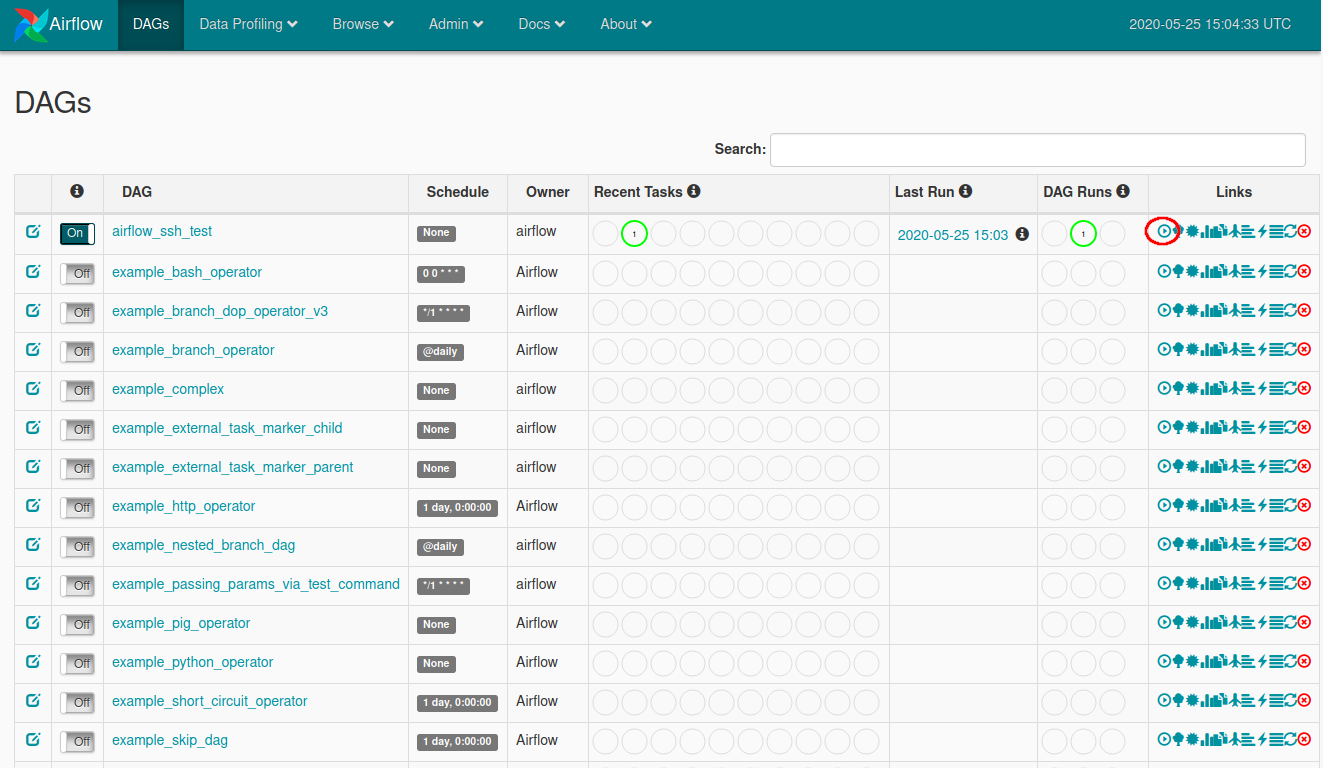

This only partially limits the problems.Ī more efficient workaround exploits the airflow’s ability to retry failed tasks. The first countermeasure is to confine sensors in separate pools. Even when enough slots are available, workers may be hogged by tons of sleeping processes. Too many “sensors” busy waiting may, if not well dimensioned, use all the worker’s slots and bring to starvation and deadlocks (if TaskExternalSensor were used for example). Sensors are a fundamental building block to create pipelines in Airflow however, historically, as they share the Operator’s main execution method, they were (and by default still are) synchronous.īy default, they busy-wait for an event to occur consuming a worker’s slot. Sensors are a special type of Operators designed to wait for an event to occur and then succeed so that their downstream tasks can run. In this article we will discuss sensors and tasks controlling external systems and, in particular, the internals of some of the (relatively) new most interesting features, Reschedule sensors, SmartSensors and Deferrable Operators. transform data, load it, etc.), or “sensors”, a task waiting for some event to happen (i.e. It is based on directed acyclic graphs (DAGs) concept, where all the different steps (tasks) of the data processing (wait for a file, transform it, ingest it, join with other datasets, process it, etc.) are represented as graph’s nodes.Įach node can be either an “operator”, that is a task doing some actual job (i.e. It allows developers to programmatically define and schedule data workflows and monitor them using Python. If your URLs aren't being generated correctly (usually they'll start with instead of the correct hostname), you may need to set the webserver base_url config.Apache Airflow is one of the most used workflow management tools for data pipelines - both AWS and GCP have a managed Airflow solution in addition to other SaaS offerings (notably Astronomer). Like in ingestion, we support a Datahub REST hook and a Kafka-based hook. In order to use this example, you must first configure the Datahub hook. lineage_emission_dag.py - emits lineage using the DatahubEmitterOperator.Note that configuration issues will still throw exceptions.Įmitting lineage via a separate operator

Go and check in Airflow at Admin -> Plugins menu if you can see the Datahub plugin.Learn more about Airflow lineage, including shorthand notation and some automation. For reference, look at the sample DAG in lineage_backend_demo.py, or reference lineage_backend_taskflow_demo.py if you're using the TaskFlow API. Note that configuration issues will still throw exceptions.Ĭonfigure inlets and outlets for your Airflow operators. If set to true, most runtime errors in the lineage backend will be suppressed and will not cause the overall task to fail. If true, the tags field of the DAG will be captured as DataHub tags. If true, the owners field of the DAG will be capture as a DataHub corpuser. The name of the datahub connection you set in step 1. Add your datahub_conn_id and/or cluster to your airflow.cfg file if it is not align with the default values.

0 kommentar(er)

0 kommentar(er)